A commentary by Jonas Scholz, FID-D

In addition to the ever-increasing use of operational data, data analysis is also becoming more critical: data-driven decision-making is increasingly important if we are to remain competitive in the rapidly changing logistics business. Recent crises, such as the coronavirus pandemic and Russia’s war of aggression against Ukraine, have shown that data can provide information that is vital for strategic decisions. Day-to-day operational decisions can also benefit from data analysis, as our colleagues in the Embrace initiative have demonstrated: they have recently been forecasting wagon revisions (scheduled overhauls) based on the mileage data of individual wagons.

To be well prepared for new requirements, we have devised a range of measures that will help us cope with growing technical complexity.

The evolution of the system landscape

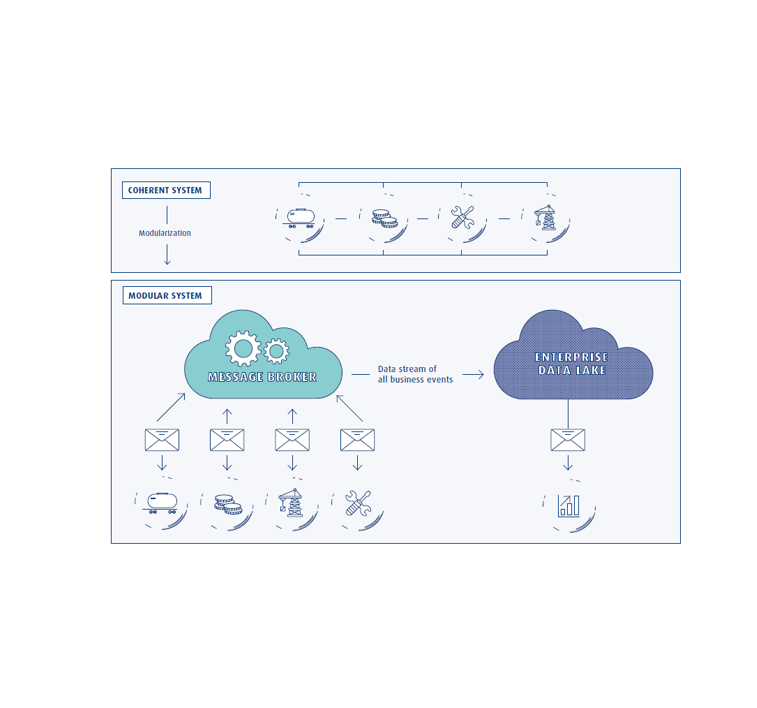

Given the growing importance of data described above, robust IT systems are essential to VTG’s work. The most effective way to increase the resilience of the system landscape is to break large systems down into smaller parts. This process is known as modularization: creating subsystems that can, ideally, run independently of one another.

To maximize the independence of these subsystems, modularization follows the boundaries of our business processes. For example, as modularization progresses, dedicated systems will emerge for areas such as contract management or fleet management. These changes will take place in the background, so the impact on users will be minimal and hidden behind the familiar user interfaces. In addition to improving stability, modularization will simplify the development of new functions and may also improve execution speed.

Breaking our system landscape down into smaller parts increases the need to exchange information between the functional components. At the same time, our need for fail-safe operation requires that these parts not be directly connected to one another, so that a malfunction in one part has as little impact as possible on the others. How can we strike this balance?

Until now, our IT systems have mostly exchanged data directly with each other. With such tight coupling, a malfunction in one system can have a direct impact on the systems connected to it. Instead of exchanging data directly, we will increasingly rely on an intermediary to communicate: the message broker. You can think of it as a mailbox into which systems deposit messages that are relevant to other systems (e.g., “A new wagon mileage has been calculated”). Systems that need this information are configured to retrieve the messages from the mailbox and process the updates at their own pace. If one of these receiving systems fails, the failure does not spread to the others: even if individual functions are unavailable or only partially available, the system landscape as a whole remains up and running.
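The mailbox principle can be sketched in a few lines of Python. This is a toy, in-memory model under our own assumptions: the class `MessageBroker`, the function `drain`, the subscriber names and the message fields are all hypothetical, and a production message broker adds persistence, delivery guarantees and network transport that this sketch leaves out. What it does show is the key property described above: the sender only deposits a message, and a receiver that is temporarily not consuming simply finds the message waiting later.

```python
import queue


class MessageBroker:
    """Toy in-memory broker (hypothetical): one mailbox (queue) per subscriber."""

    def __init__(self):
        self.mailboxes: dict[str, queue.Queue] = {}

    def subscribe(self, name: str) -> queue.Queue:
        # Each receiving system gets its own mailbox.
        self.mailboxes[name] = queue.Queue()
        return self.mailboxes[name]

    def publish(self, message: dict) -> None:
        # The sender only deposits the message; it never calls the receivers directly.
        for mailbox in self.mailboxes.values():
            mailbox.put(message)


def drain(mailbox: queue.Queue, handler) -> list:
    """Process all waiting messages at the receiver's own pace."""
    results = []
    while not mailbox.empty():
        results.append(handler(mailbox.get()))
    return results


broker = MessageBroker()
billing = broker.subscribe("billing")          # hypothetical subscriber names
maintenance = broker.subscribe("maintenance")

# Publishing succeeds even though "maintenance" is currently not consuming.
broker.publish({"event": "MileageCalculated", "wagonId": "W-1", "km": 40000})

print(drain(billing, lambda m: m["km"]))            # [40000]
print(maintenance.qsize())                          # 1 (waiting until maintenance is back)
print(drain(maintenance, lambda m: m["wagonId"]))   # ['W-1']
```

Because each receiver has its own mailbox, a slow or failed receiver only delays its own processing; the publisher and the other receivers are unaffected.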

The concept is already being applied in several ongoing development initiatives, including traigo, WAMOS!, HERMES and SAP.

The Enterprise Data Lake

From a data analytics perspective, this shift in how data is exchanged will create new synergies. Over the past two years, we have established a new data platform that can record and analyze the data traffic passing through the message broker. The Enterprise Data Lake (EDL) will simplify cross-system data analysis by automatically importing the messages that flow through the message broker and reusing their existing data formats.

Thanks to this transition to message-based communication, the amount of data available in the Enterprise Data Lake will also grow. In addition to improving system stability, the modularization of our IT systems will therefore make data easier to use for analysis.

In addition to simplifying data integration, the Enterprise Data Lake is designed to process very large volumes of data. On average, it receives more than one billion messages per month, and tens of thousands of messages per second at peak times. Analyzing data at this scale is a technical challenge, but it is also a key prerequisite for applying modern data analysis methods.
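To put these figures into perspective, a quick back-of-the-envelope calculation compares the average and peak message rates. It assumes a 30-day month and takes the quoted figures at their lower bounds (exactly one billion messages per month, 10,000 messages per second at peak); the results are illustrative, not measured values.

```python
# Back-of-the-envelope throughput check using the figures quoted in the text.
# Assumptions: a 30-day month; "over one billion" taken as exactly 1e9;
# "tens of thousands" at peak taken as 10,000 messages per second.
messages_per_month = 1_000_000_000
seconds_per_month = 30 * 24 * 60 * 60  # 2,592,000 s

average_rate = messages_per_month / seconds_per_month
peak_rate = 10_000

print(f"average ≈ {average_rate:.0f} msg/s")                # average ≈ 386 msg/s
print(f"peak/average ≈ {peak_rate / average_rate:.0f}x")     # peak/average ≈ 26x
```

Under these assumptions, peak load is roughly 26 times the average, which illustrates why such a platform has to be sized for peaks rather than for average traffic.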

One such method is machine learning, which uses statistical techniques to detect patterns in data. The detected patterns can then be used in a number of ways, for example to predict the wear and tear on a wagon and its mileage over time. Such predictions can help keep unscheduled downtime and unnecessary workshop visits of our assets to a minimum. As a rule, the larger and more detailed the data basis, the better the predictions.

The Enterprise Data Lake is designed to make it as easy as possible to build a large, high-quality data basis by leveraging synergies with the new software architecture and with other analytical systems (e.g., the data warehouse).

The messages from the individual systems, which together capture the stream of all business events, flow via the message broker into the Enterprise Data Lake, where they are prepared and made available for analysis. Because this preparation follows a generic approach rather than a custom integration for each source system, the effort required to integrate data for analysis is kept to a minimum.
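The generic approach can be illustrated with a small Python sketch: instead of writing a dedicated integration for each source system, a single routine turns every incoming message into a table row, derives the column names from the message’s own fields, and groups rows by topic. The topics, field names and JSON encoding are assumptions made for illustration; they do not describe how the EDL actually prepares messages.

```python
import json
from collections import defaultdict


def flatten(obj: dict, prefix: str = "") -> dict:
    """Flatten nested JSON objects into dotted column names, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, name + "."))
        else:
            flat[name] = value
    return flat


def ingest(raw_messages):
    """Generic ingestion: one table per topic, columns derived from each message's own fields."""
    tables = defaultdict(list)
    for topic, body in raw_messages:
        tables[topic].append(flatten(json.loads(body)))
    return tables


# Hypothetical topics and payloads, for illustration only.
raw = [
    ("wagon.mileage", '{"wagonId": "W-1", "mileage": {"km": 40000, "asOf": "2023-05-31"}}'),
    ("contract.updated", '{"contractId": "C-7", "status": "active"}'),
]
tables = ingest(raw)
print(tables["wagon.mileage"])
# [{'wagonId': 'W-1', 'mileage.km': 40000, 'mileage.asOf': '2023-05-31'}]
```

New message types need no new code in this sketch: a new topic simply becomes a new table.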

The measures presented here for mastering complexity in software development may have little immediate impact on your everyday work, but in the long term they will have a positive influence that many will feel: system stability will increase, and new opportunities will emerge to use data for analysis in day-to-day business. We are laying the technical groundwork for a future-proof system landscape that is more stable and makes greater use of our data, thereby strengthening VTG.

Please do not hesitate to contact us if you have any questions or suggestions.